Researchers from the GrapheneX-UTS Human-centric Artificial Intelligence Centre have developed a portable, non-invasive system that can decode silent thoughts and turn them into text.

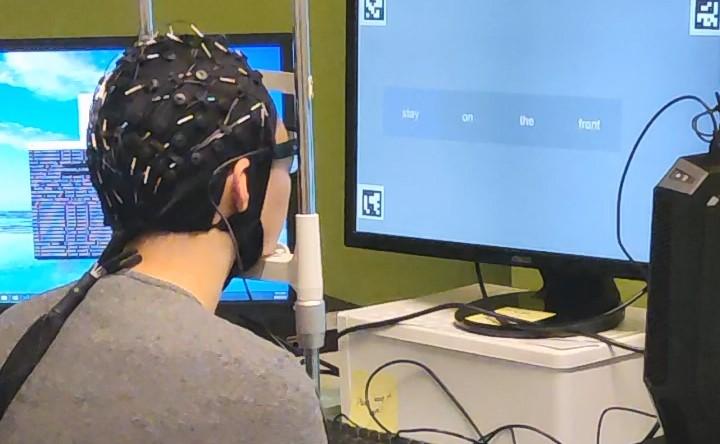

UTS researcher tests new mind-reading technology. Image: UTS

In a world-first, researchers from the GrapheneX-UTS Human-centric Artificial Intelligence Centre at the University of Technology Sydney (UTS) have developed a portable, non-invasive system that can decode silent thoughts and turn them into text.

The technology could aid communication for people who are unable to speak due to illness or injury, including stroke or paralysis. It could also enable seamless communication between humans and machines, such as the operation of a bionic arm or robot.

The study has been selected as a spotlight paper at the NeurIPS conference in New Orleans, a top-tier annual meeting that showcases world-leading research on artificial intelligence and machine learning.

The research was led by Distinguished Professor CT Lin, Director of the GrapheneX-UTS HAI Centre, together with first author Yiqun Duan and fellow PhD candidate Jinzhou Zhou from the UTS Faculty of Engineering and IT.

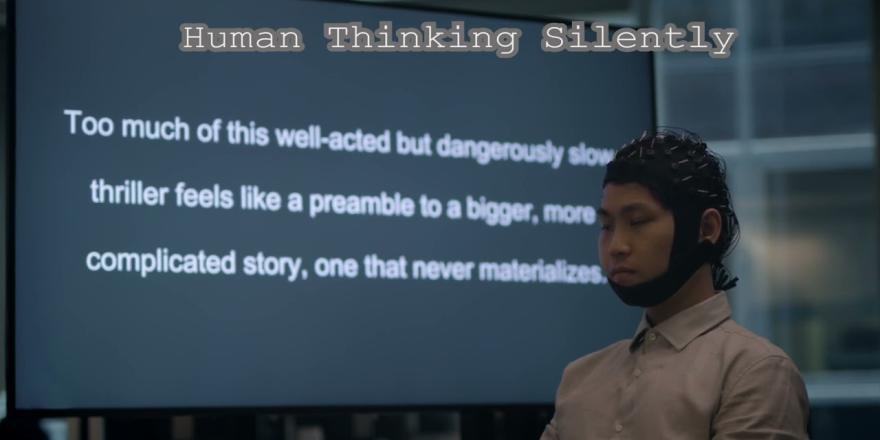

In the study, participants silently read passages of text while wearing a cap that recorded electrical brain activity through their scalp using electroencephalography (EEG). A demonstration of the technology can be seen in the following video.

Our BrainGPT research is about decoding information from the brain without invasive technology. This will redefine the technologies we see today. By connecting our multitask EEG encoder with large language models, our BrainGPT has achieved a major breakthrough in decoding coherent and readable sentences from EEG signals. Our work has been accepted by the NeurIPS conference as a spotlight paper in 2023.

Good afternoon. I hope you're doing well. I'll start with a cappuccino, please, with an extra shot of espresso.

To capture information directly from raw EEG waves, we designed an encoder to process waveform EEG signals. After training, this encoder allows more convenient decoding of EEG signals without the need for eye-tracking devices or preprocessing steps.

Yes, I'd like a bowl of chicken soup, please.

yes, a bowl of beef soup.

The fairy tale was filled with magical adventures in a charming enchanted forest.

the fairy tale had lots of magic a journey forest magic

It's solid and affecting and exactly as thought-provoking as it should be.

It's believable, is one provoking as the sounds be.

Too much of this well acted but dangerously slow thriller feels like a preamble to a bigger, more complicated story. One that never materializes.

Too much of movie made film totally predictable is like a be L E to a much more serious one that quit a izes.

The movie's plot is almost entirely witless and inane, carrying every gag two or three times beyond its limit to sustain a laugh.

The film is most is this as one less the aim and no joke and or three times. Its point be the laugh.

High quality film with twist

good flim twists interesting

If you already like this sort of thing, this is that sort of thing all over again.

if you know the movie of thing you is a kind of things all over again.

Which juice do you like best?

The EEG wave is segmented into distinct units that capture specific characteristics and patterns from the human brain. This is done by DeWave, an AI model developed by the researchers, which translates EEG signals into words and sentences by learning from large quantities of EEG data.

“This research represents a pioneering effort in translating raw EEG waves directly into language, marking a significant breakthrough in the field,” said Distinguished Professor Lin.

“It is the first to incorporate discrete encoding techniques in the brain-to-text translation process, introducing an innovative approach to neural decoding. The integration with large language models is also opening new frontiers in neuroscience and AI,” he said.
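To make the idea of turning a continuous EEG wave into discrete units more concrete, here is a minimal toy sketch in Python of one standard discrete-encoding technique, vector quantization: a single-channel signal is cut into fixed-length windows and each window is replaced by the index of its nearest entry in a codebook. This is not DeWave's implementation. DeWave learns its encoder and codebook from data, whereas the window length, the hand-written codebook and the helper names (`segment`, `nearest_code`, `discretize`) below are hypothetical choices made only for illustration.

```python
import math
from typing import List, Sequence


def segment(signal: Sequence[float], window: int) -> List[List[float]]:
    """Cut a single-channel signal into non-overlapping windows of `window` samples.

    A trailing partial window is dropped (a toy simplification)."""
    if window <= 0:
        raise ValueError("window must be positive")
    return [list(signal[i:i + window]) for i in range(0, len(signal) - window + 1, window)]


def nearest_code(vec: Sequence[float], codebook: Sequence[Sequence[float]]) -> int:
    """Return the index of the codebook vector closest to `vec` (Euclidean distance)."""
    return min(range(len(codebook)), key=lambda k: math.dist(vec, codebook[k]))


def discretize(signal: Sequence[float], window: int,
               codebook: Sequence[Sequence[float]]) -> List[int]:
    """Map each window to a discrete code index, turning a wave into a token sequence.

    In a real system the codebook (and usually an encoder in front of it) would be
    learned from data; here it is supplied by hand as a hypothetical example."""
    return [nearest_code(seg, codebook) for seg in segment(signal, window)]


if __name__ == "__main__":
    toy_codebook = [[0.0, 0.0], [1.0, 1.0], [-1.0, -1.0]]  # hypothetical 3-entry codebook
    toy_signal = [0.1, -0.1, 0.9, 1.2, -1.0, -0.8, 0.5]     # made-up samples
    print(discretize(toy_signal, 2, toy_codebook))
```

With the made-up values above, the script prints `[0, 1, 2]`: each two-sample window snaps to its closest codebook entry, and the trailing partial window `[0.5]` is dropped. Real EEG is multi-channel and much noisier, so a learned encoder would normally sit in front of the quantization step.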

Previous technology to translate brain signals to language has either required surgery to implant electrodes in the brain, such as Elon Musk’s Neuralink, or scanning in an MRI machine, which is large, expensive, and difficult to use in daily life.

These methods also struggle to transform brain signals into word-level segments without additional aids such as eye-tracking, which restricts the practical application of these systems. The new technology can be used either with or without eye-tracking.
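Eye-tracking helps because it records when the reader's gaze rests on each word, which gives natural boundaries for cutting the EEG recording into word-level segments. The Python sketch below assumes a hypothetical input format, one EEG channel sampled at `fs` Hz plus a list of `(word, start_seconds, end_seconds)` fixations, and a hypothetical helper name `word_level_segments`. It illustrates the idea only and is not the data format or code used in the study.

```python
from typing import List, Sequence, Tuple

# Hypothetical input format: (word, fixation start in seconds, fixation end in seconds)
Fixation = Tuple[str, float, float]


def word_level_segments(eeg: Sequence[float], fs: float,
                        fixations: Sequence[Fixation]) -> List[Tuple[str, List[float]]]:
    """Slice a single-channel EEG recording into one segment per fixated word.

    `fs` is the sampling rate in Hz; fixation times are converted to sample indices
    and clamped to the length of the recording."""
    segments = []
    for word, start_s, end_s in fixations:
        lo = max(0, round(start_s * fs))
        hi = min(len(eeg), round(end_s * fs))
        # An empty list is kept if a fixation falls outside the recording.
        segments.append((word, list(eeg[lo:hi])))
    return segments


if __name__ == "__main__":
    toy_eeg = list(range(10))  # made-up samples at a toy rate of 4 Hz
    toy_fixations = [("the", 0.0, 0.5), ("author", 0.5, 1.5)]
    print(word_level_segments(toy_eeg, 4, toy_fixations))
```

Here the script prints `[('the', [0, 1]), ('author', [2, 3, 4, 5])]`. Without eye-tracking these word boundaries are unavailable, so a decoder has to segment the signal some other way, for instance with fixed-length windows.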

The UTS research was carried out with 29 participants. Because EEG waves differ between individuals, this means the system is likely to be more robust and adaptable than previous decoding technology that has only been tested on one or two individuals.

The use of EEG signals received through a cap, rather than from electrodes implanted in the brain, means that the signal is noisier. In terms of EEG translation, however, the study reported state-of-the-art performance, surpassing previous benchmarks.

“The model is more adept at matching verbs than nouns. However, when it comes to nouns, we saw a tendency towards synonymous pairs rather than precise translations, such as ‘the man’ instead of ‘the author’,” said Duan.

“We think this is because when the brain processes these words, semantically similar words might produce similar brain wave patterns. Despite the challenges, our model yields meaningful results, aligning keywords and forming similar sentence structures,” he said.

The translation accuracy score is currently around 40% on BLEU-1, that is, a score of about 0.4. The BLEU score is a number between zero and one that measures the similarity of the machine-translated text to a set of high-quality reference translations; BLEU-1 compares individual words. The researchers hope to see this improve to a level comparable to traditional language translation or speech recognition programs, which score closer to 90%.
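As a rough illustration of what a BLEU-1 score measures, the following Python sketch computes it for one candidate sentence against one reference: the fraction of candidate words that also appear in the reference (each word's count clipped to its count in the reference), multiplied by a brevity penalty for candidates shorter than the reference. Published results are normally computed over a whole test set, possibly with several references and a specific tokenizer, so this lower-cased, whitespace-tokenized, single-reference version is a simplification. The helper name `bleu1` and the example sentences are made up, the latter echoing the "the man" versus "the author" substitution Duan describes.

```python
import math
from collections import Counter


def bleu1(candidate: str, reference: str) -> float:
    """Sentence-level BLEU-1 against a single reference.

    Uses lower-casing and whitespace tokenization.
    BLEU-1 = brevity_penalty * clipped unigram precision."""
    cand = candidate.lower().split()
    ref = reference.lower().split()
    if not cand or not ref:
        return 0.0
    ref_counts = Counter(ref)
    # Each candidate word counts at most as many times as it occurs in the reference.
    clipped = sum(min(n, ref_counts[w]) for w, n in Counter(cand).items())
    precision = clipped / len(cand)
    # Brevity penalty: candidates shorter than the reference are scaled down.
    c, r = len(cand), len(ref)
    bp = 1.0 if c > r else math.exp(1 - r / c)
    return bp * precision


if __name__ == "__main__":
    print(bleu1("the man wrote a book", "the author wrote a book"))
```

For the example pair, four of the five candidate words match and the lengths are equal, so the score is 0.8. A score of about 0.4 therefore means, roughly speaking, that around 40% of the decoded words line up with the reference once length is taken into account.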

The research follows on from previous brain-computer interface technology developed by UTS in association with the Australian Defence Force that uses brainwaves to command a quadruped robot, as demonstrated in an ADF video.